Batch download images from a website

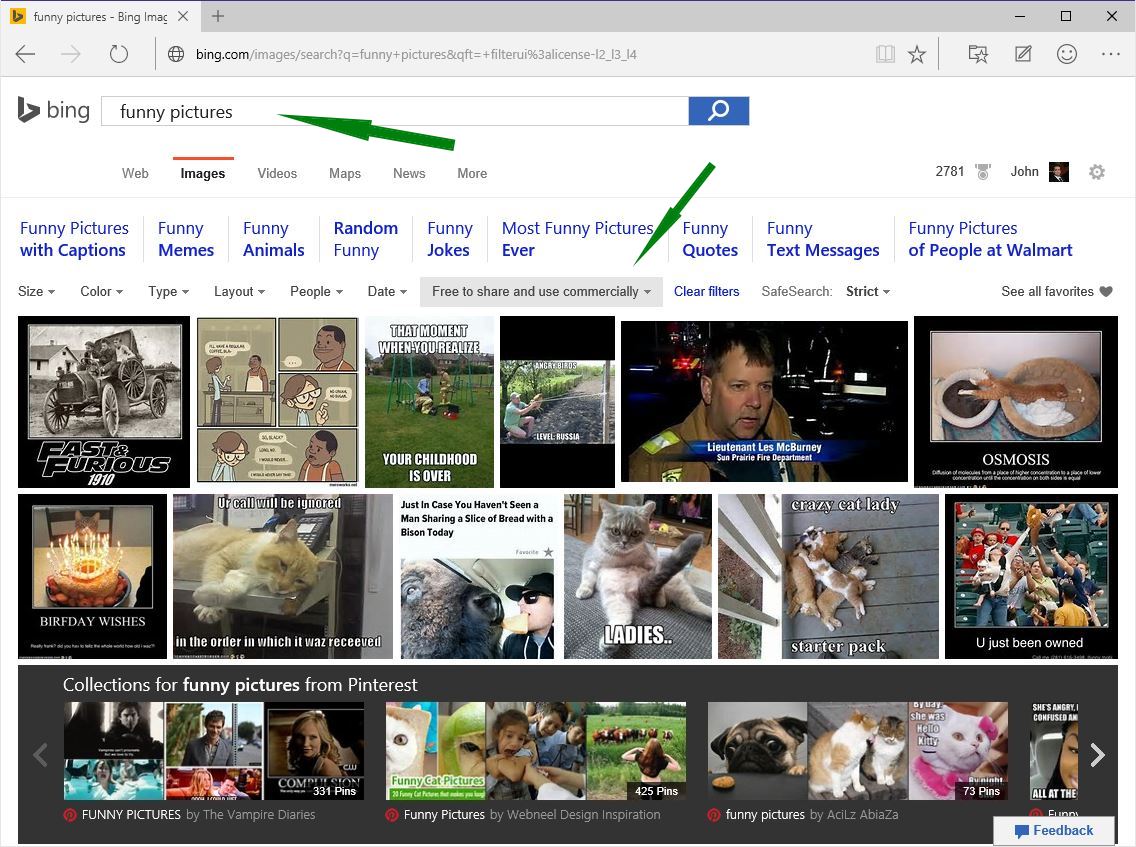

Time for another quick tip. Today let's write a simple PowerShell script to download a bunch of images from a website. As an example, I will use Bing to search for 'funny pictures', restricted to images that are 'Free to share and use commercially'. This can be quite useful when you are looking for images to use in your projects, presentations, websites, whatever. Just a small disclaimer: anyone is absolutely free to use and modify the PowerShell script I have written for this post; however, I won't be held responsible for how it's used. Any license infringements will be the responsibility of the person executing the script.

Before we jump into the script, let's break the problem down into tiny actions. If you had to do this manually, these are the steps you would need:

- open a web browser (Chrome, Firefox, IE, Edge or whichever you prefer)

- go to Bing.com

- select 'Images'

- set the license filter to 'Free to share and use commercially'

- search for 'funny pictures'

- select an image (by clicking on it)

- right-click on the image

- click on 'Save image as...'

- select a folder to save the image in

- repeat the previous four steps until you have all the images you want

This is a lot of work, so let's use PowerShell to do the job for us. If you aren't familiar with PowerShell, you can simply open "Windows PowerShell ISE" on your computer, copy-paste my script and run it. Now, let's break the same problem down into smaller actions that PowerShell will execute:

- we will need a web client to download the HTML and the images from Bing

- we will also need to construct the URL with the desired search options

- download the HTML from Bing's results page

- use a regular expression to look for URLs terminating in '.jpg' or '.png'

- create a folder on your computer, to store the downloaded images

- download each image individually
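
To make the URL-encoding and regex steps concrete before the full script, here is a minimal offline sketch. The sample HTML string and the simplified pattern are assumptions made up for illustration: they are neither Bing's actual markup nor the expression used in the script, and `[System.Uri]::EscapeDataString` is just one alternative way to encode a query.

```powershell
# URL-encode the search text (EscapeDataString encodes a space as %20)
$searchFor   = "funny pictures"
$searchQuery = [System.Uri]::EscapeDataString($searchFor)

# Hypothetical HTML fragment standing in for a downloaded results page
$sampleHtml = '<img src="http://example.com/a/cat.jpg">' +
              '<img src="https://example.com/b/dog.png">' +
              '<a href="http://example.com/page.html">x</a>' +
              '<img src="http://example.com/a/cat.jpg">'

# Simplified pattern (an assumption): http(s) URLs that end in .jpg or .png
$simpleRegex = 'https?://[^\s"<>]+?\.(?:jpg|png)'

# Collect every match, keep only the matched text, and drop duplicates
$imageUrls = [regex]::Matches($sampleHtml, $simpleRegex, 'IgnoreCase') |
    ForEach-Object { $_.Value } |
    Select-Object -Unique

$imageUrls
```

The link to `page.html` is skipped because it does not end in an image extension, and the repeated `cat.jpg` URL is kept only once.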

Now, here's the resulting script:

# script parameters, feel free to change them

$downloadFolder = "C:\Downloaded Images\"

$searchFor = "funny pictures"

$nrOfImages = 12

# create a WebClient instance that will handle Network communications

$webClient = New-Object System.Net.WebClient

# load System.Web so we can use HttpUtility

Add-Type -AssemblyName System.Web

# URL encode our search query

$searchQuery = [System.Web.HttpUtility]::UrlEncode($searchFor)

$url = "http://www.bing.com/images/search?q=$searchQuery&first=0&count=$nrOfImages&qft=+filterui%3alicense-L2_L3_L4"

# get the HTML from resulting search response

$webpage = $webClient.DownloadString($url)

# use a 'fancy' regular expression to find URLs ending in '.jpg' or '.png'

$regex = "[(http(s)?):\/\/(www\.)?a-z0-9@:%._\+~#=]{2,256}\.[a-z]{2,6}\b([-a-z0-9@:%_\+.~#?&//=]*)((\.jpg(\/)?)|(\.png(\/)?)){1}(?!([\w\/]+))"

# keep only the matched text of each hit and drop duplicate URLs

$listImgUrls = $webpage | Select-String -Pattern $regex -AllMatches | ForEach-Object { $_.Matches } | ForEach-Object { $_.Value } | Select-Object -Unique

# let's figure out if the folder we will use to store the downloaded images already exists

if((Test-Path $downloadFolder) -eq $false)

{

Write-Output "Creating '$downloadFolder'..."

New-Item -ItemType Directory -Path $downloadFolder | Out-Null

}

foreach($imgUrlString in $listImgUrls)

{

[Uri]$imgUri = New-Object System.Uri -ArgumentList $imgUrlString

# this is a way to extract the image name from the Url

$imgFile = [System.IO.Path]::GetFileName($imgUri.LocalPath)

# build the full path to the target download location

$imgSaveDestination = Join-Path $downloadFolder $imgFile

Write-Output "Downloading '$imgUrlString' to '$imgSaveDestination'..."

$webClient.DownloadFile($imgUri, $imgSaveDestination)

}
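
One thing the loop above does not handle is two URLs that share a file name (say, two different `image.jpg` files): both would be saved to the same destination path. Here is a minimal sketch of one way around that, assuming a hypothetical helper named `Get-UniqueFilePath` (not part of the original script) that appends a counter until the path is free:

```powershell
# Hypothetical helper: returns a path inside $Folder that does not exist on disk yet
function Get-UniqueFilePath {
    param(
        [string]$Folder,
        [string]$FileName
    )

    $baseName  = [System.IO.Path]::GetFileNameWithoutExtension($FileName)
    $extension = [System.IO.Path]::GetExtension($FileName)
    $candidate = Join-Path $Folder $FileName
    $counter   = 1

    # Try name_1.ext, name_2.ext, ... until no file with that name exists
    while (Test-Path -LiteralPath $candidate) {
        $candidate = Join-Path $Folder ("{0}_{1}{2}" -f $baseName, $counter, $extension)
        $counter++
    }

    return $candidate
}
```

Inside the loop, the `Join-Path` line could then become `$imgSaveDestination = Get-UniqueFilePath -Folder $downloadFolder -FileName $imgFile`.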

You can also view the script on GitHub. Enjoy!